- #DOWNLOAD SPARK 2.2.0 CORE JAR HOW TO#

- #DOWNLOAD SPARK 2.2.0 CORE JAR ZIP FILE#

- #DOWNLOAD SPARK 2.2.0 CORE JAR DRIVER#

Most of the configs are the same for Spark on YARN as for other deployment modes; see the configuration page for more information on those. Some configs, however, are specific to Spark on YARN.

In YARN terminology, executors and application masters run inside “containers”. YARN has two modes for handling container logs after an application has completed. If log aggregation is turned on (with the yarn.log-aggregation-enable config), container logs are copied to HDFS and deleted on the local machine. These logs can then be viewed from anywhere on the cluster with the yarn logs command, which prints out the contents of all log files from all containers of the given application. You can also view the container log files directly in HDFS using the HDFS shell or API. The directory where they are located can be found by looking at your YARN configs (-app-log-dir and -app-log-dir-suffix).

The logs are also available on the Spark Web UI under the Executors tab. For this, you need to have both the Spark history server and the MapReduce history server running, and yarn-site.xml configured properly.
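Fetching aggregated logs with the yarn logs command can be wrapped in a small shell helper. This is a minimal sketch, assuming log aggregation is enabled and the `yarn` CLI is on PATH. The function names `is_valid_app_id` and `fetch_app_logs` are hypothetical, and the ID pattern is only a sanity check based on the usual `application_<timestamp>_<sequence>` form.

```shell
# Sketch: fetch the aggregated container logs of a finished YARN application.
# Assumptions: yarn.log-aggregation-enable=true, the `yarn` CLI is on PATH,
# and the helper names below are hypothetical.

# Succeed if $1 looks like a YARN application ID,
# e.g. application_1500000000000_0001 (cluster timestamp + sequence number).
is_valid_app_id() {
  printf '%s\n' "$1" | grep -Eq '^application_[0-9]+_[0-9]+$'
}

# Print the logs of all containers (AM and executors) of one application.
# The output can be large; redirect it to a file when needed.
fetch_app_logs() {
  if ! is_valid_app_id "$1"; then
    echo "not a YARN application ID: $1" >&2
    return 1
  fi
  yarn logs -applicationId "$1"
}
```

For example, `fetch_app_logs application_1500000000000_0001 > app.log` saves everything to one file. The ID shown is a placeholder.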

#DOWNLOAD SPARK 2.2.0 CORE JAR ZIP FILE#

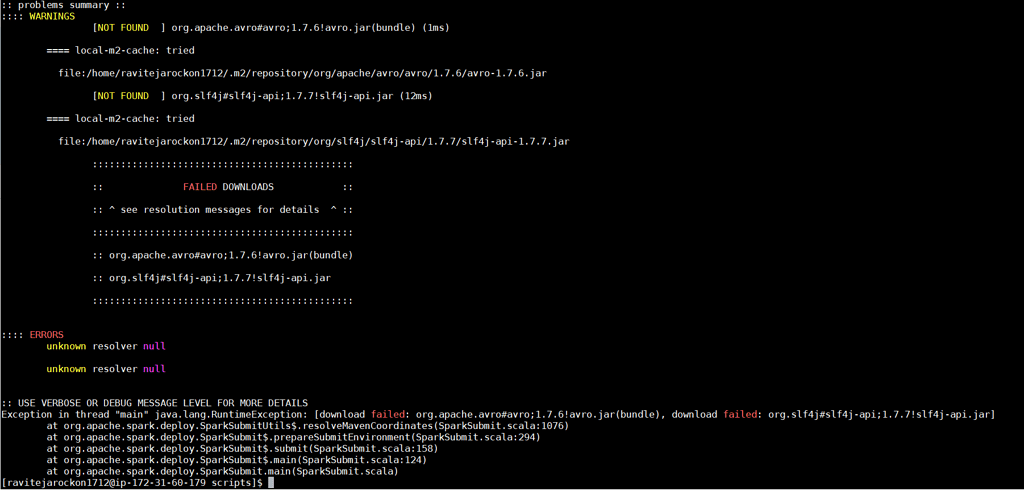

Running Spark on YARN requires a binary distribution of Spark which is built with YARN support. Binary distributions can be downloaded from the downloads page of the project website; to build Spark yourself, refer to Building Spark. To make Spark runtime jars accessible from the YARN side, you can specify or. For details, please refer to Spark Properties. If neither nor is specified, Spark will create a zip file with all jars under $SPARK_HOME/jars and upload it to the distributed cache.

#DOWNLOAD SPARK 2.2.0 CORE JAR DRIVER#

In cluster mode, the driver runs on a different machine than the client, so SparkContext.addJar won’t work out of the box with files that are local to the client. To make files on the client available to SparkContext.addJar, include them with the `--jars` option in the launch command, for example `--jars my-other-jar.jar,my-other-other-jar.jar`.
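The shell sketch below shows one way to pass extra client-side jars in cluster mode. It assumes you run it from $SPARK_HOME so that `./bin/spark-submit` resolves. The helpers `join_jars` and `submit_with_jars`, the `DRY_RUN` switch and the jar name `my-main-jar.jar` are all hypothetical. Setting `DRY_RUN=1` prints the command instead of running it.

```shell
# Sketch: submit a cluster-mode application with extra client-side jars.
# Assumptions: run from $SPARK_HOME; helper names, DRY_RUN and jar names
# are hypothetical placeholders.

# Join all arguments with commas, the separator that --jars expects.
# The subshell body keeps the IFS change local to this function.
join_jars() (
  IFS=,
  printf '%s\n' "$*"
)

# Usage: submit_with_jars MAIN_CLASS MAIN_JAR EXTRA_JAR [EXTRA_JAR...]
submit_with_jars() {
  if [ "$#" -lt 3 ]; then
    echo "usage: submit_with_jars MAIN_CLASS MAIN_JAR EXTRA_JAR..." >&2
    return 1
  fi
  main_class=$1
  main_jar=$2
  shift 2
  jars=$(join_jars "$@")
  # Rebuild the positional parameters as the full command line.
  set -- ./bin/spark-submit --class "$main_class" --master yarn \
    --deploy-mode cluster --jars "$jars" "$main_jar"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # show the command instead of running it
  else
    "$@"
  fi
}
```

The extra jars go into a list rather than a hand-written string, so adding or removing a dependency cannot break the comma separation.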

To launch a Spark application in client mode, do the same as in cluster mode, but replace `cluster` with `client`. The following shows how you can run spark-shell in client mode: `$ ./bin/spark-shell --master yarn --deploy-mode client`.
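Here is a minimal shell sketch of starting spark-shell on YARN, assuming it runs from $SPARK_HOME. It fails early for cluster mode. spark-submit itself rejects cluster deploy mode for Spark shells, because an interactive shell needs its driver in the client process. `launch_shell` and `DRY_RUN` are hypothetical names.

```shell
# Sketch: start spark-shell on YARN in client mode.
# Assumptions: run from $SPARK_HOME; launch_shell and DRY_RUN are hypothetical.

# Usage: launch_shell [client|cluster]   (defaults to client)
launch_shell() {
  mode=${1:-client}
  case "$mode" in
    client)
      ;;  # driver runs in this process, so the REPL can read your input
    cluster)
      echo "spark-shell cannot use cluster mode; use client" >&2
      return 1
      ;;
    *)
      echo "unknown deploy mode: $mode (expected client or cluster)" >&2
      return 1
      ;;
  esac
  # No ResourceManager address here: YARN mode reads it from the Hadoop config.
  set -- ./bin/spark-shell --master yarn --deploy-mode "$mode"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # show the command instead of running it
  else
    "$@"
  fi
}
```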

#DOWNLOAD SPARK 2.2.0 CORE JAR HOW TO#

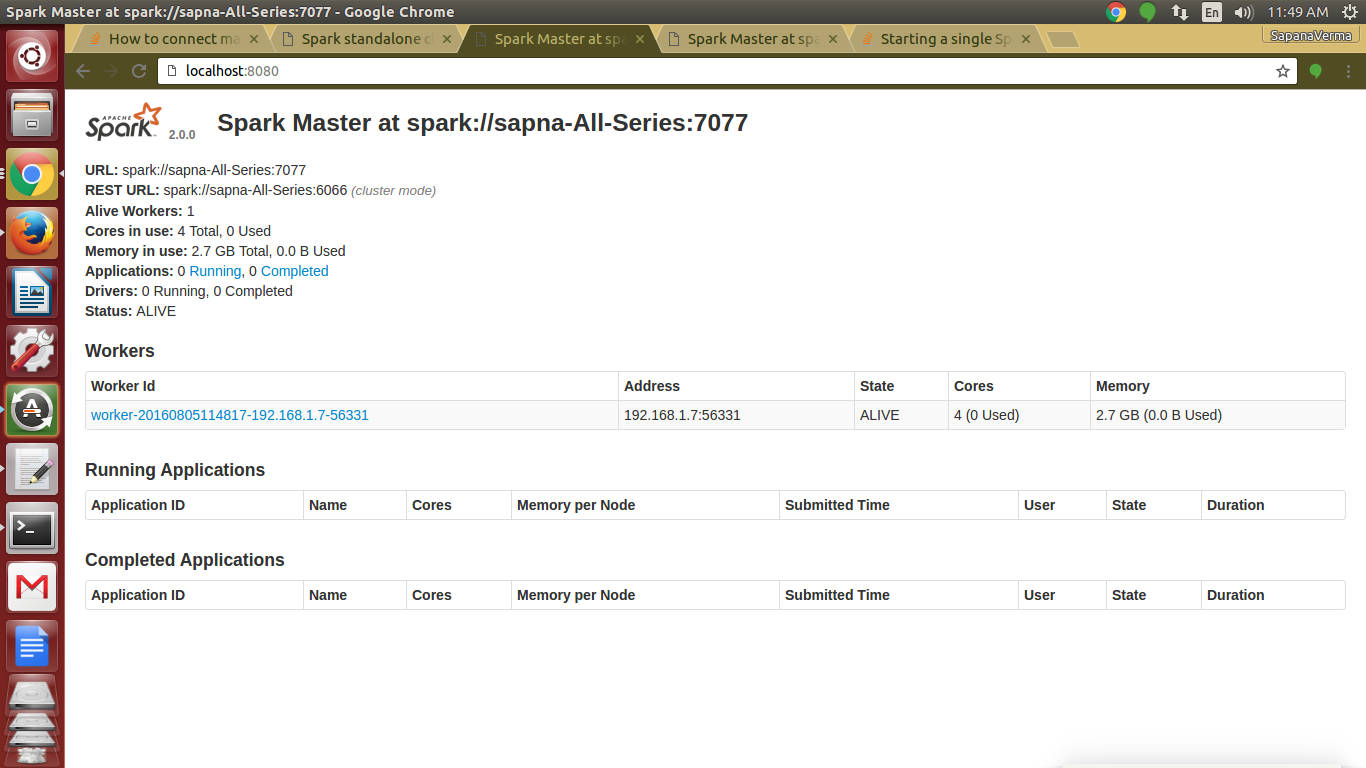

Support for running on YARN was added to Spark in version 0.6.0, and improved in subsequent releases.

To launch Spark on YARN, ensure that HADOOP_CONF_DIR or YARN_CONF_DIR points to the directory which contains the (client side) configuration files for the Hadoop cluster. These configs are used to write to HDFS and connect to the YARN ResourceManager. The configuration contained in this directory will be distributed to the YARN cluster so that all containers used by the application use the same configuration. If the configuration references Java system properties or environment variables not managed by YARN, they should also be set in the Spark application’s configuration (driver, executors, and the AM when running in client mode).

There are two deploy modes that can be used to launch Spark applications on YARN. In cluster mode, the Spark driver runs inside an application master process which is managed by YARN on the cluster, and the client can go away after initiating the application. In client mode, the driver runs in the client process, and the application master is only used for requesting resources from YARN.

Unlike Spark standalone and Mesos modes, in which the master’s address is specified in the `--master` parameter, in YARN mode the ResourceManager’s address is picked up from the Hadoop configuration. To launch a Spark application in cluster mode: `$ ./bin/spark-submit --class path.to.your.Class --master yarn --deploy-mode cluster`

The above starts a YARN client program, which starts the default Application Master. Your application (for example, Spark’s SparkPi example) then runs as a child thread of the Application Master. The client will periodically poll the Application Master for status updates and display them in the console, and will exit once your application has finished running. For how to see driver and executor logs, refer to the discussion of container logs and the yarn logs command on this page.
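Before a cluster-mode launch, you can check the HADOOP_CONF_DIR / YARN_CONF_DIR requirement with a small shell sketch. It makes three assumptions:

- A usable directory contains yarn-site.xml. Real clusters usually also need files such as core-site.xml and hdfs-site.xml.
- It checks HADOOP_CONF_DIR first. This is a choice of the sketch, not a statement about Spark's own precedence.
- It runs from $SPARK_HOME.

`hadoop_conf_dir`, `submit_cluster` and `DRY_RUN` are hypothetical names.

```shell
# Sketch: verify the Hadoop client configuration, then submit in cluster mode.
# Assumptions: a usable config directory contains yarn-site.xml; checking
# HADOOP_CONF_DIR before YARN_CONF_DIR is this sketch's choice; run from
# $SPARK_HOME; hadoop_conf_dir, submit_cluster and DRY_RUN are hypothetical.

# Print the first configured directory that contains yarn-site.xml.
hadoop_conf_dir() {
  for dir in "${HADOOP_CONF_DIR:-}" "${YARN_CONF_DIR:-}"; do
    if [ -n "$dir" ] && [ -f "$dir/yarn-site.xml" ]; then
      printf '%s\n' "$dir"
      return 0
    fi
  done
  echo "point HADOOP_CONF_DIR or YARN_CONF_DIR at the Hadoop client configs" >&2
  return 1
}

# Usage: submit_cluster MAIN_CLASS [APP_JAR_AND_ARGS...]
submit_cluster() {
  if [ "$#" -lt 1 ]; then
    echo "usage: submit_cluster MAIN_CLASS [APP_JAR_AND_ARGS...]" >&2
    return 1
  fi
  hadoop_conf_dir >/dev/null || return 1
  main_class=$1
  shift
  # --master is just "yarn": the ResourceManager address comes from the config.
  set -- ./bin/spark-submit --class "$main_class" --master yarn \
    --deploy-mode cluster "$@"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # show the command instead of running it
  else
    "$@"
  fi
}
```

For example, `DRY_RUN=1 submit_cluster path.to.your.Class app.jar` prints the command it would run. Here `app.jar` is a placeholder.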
